Distributed Optimization and Control

NREL is working to advance foundational science and translate advances in distributed optimization and control into breakthrough approaches for integrating sustainable and distributed infrastructures into our energy systems.

The electric power system is evolving toward a massively distributed infrastructure with millions of controllable nodes. Its future operational landscape will be markedly different from existing operations, in which power generation is concentrated at a few large fossil-fuel power plants, use of renewable generation and storage is relatively rare, and loads typically operate in open-loop fashion.

Current research and development efforts aim to leverage advances in optimization and control to develop distributed control frameworks for next-generation power systems that ensure stability, preserve reliability, and meet economic objectives and customer preferences.

Capabilities

- Distributed optimization and control

- Online optimization and learning

- Stochastic optimization

- Data-driven optimization and control

- Dynamics and stability

- Cyber-physical systems

- Grid-interactive buildings

- Numerical simulations and hardware-in-the-loop experiments

Projects

Grid resilience and security are critical to power and energy systems, yet increasing weather-related disasters and cyberattacks underscore the vulnerabilities of our electric grid. This project advances the foundational science of power and energy systems resilience and develops a theoretical and algorithmic framework for real-time resilience of highly distributed energy systems. The goal is to represent a complex energy system and steer it through a disruption event while minimizing the effect of the disruption and the recovery time. The approach leverages foundational science in adaptive and robust control theory, artificial intelligence and machine learning algorithms for control, graph theory, and uncertain dynamical systems.

Learn more in Ripple-Type Control for Enhancing Resilience of Networked Physical Systems.

This project advances the science behind asynchronous control and monitoring of power systems, with a particular focus on distribution grids. Distribution grids are inexorably changing at the edge, where a massive number of distributed energy resources, smart meters, and intelligent sensors and actuators—broadly referred to as Internet of Things-enabled devices—are being deployed. Thus, distribution grids are evolving from passive to highly distributed active networks capable of performing advanced tasks and enhancing robustness and resilience. This project will produce innovative asynchronous control, optimization, and estimation schemes that are grounded on solid analytical foundations. The benefit of the proposed algorithms will be shown through extensive simulations that leverage the autonomous energy systems simulation framework.

Learn more in Online State Estimation for Systems with Asynchronous Sensors.

Reinforcement learning can produce near-optimal decisions in unknown environments by leveraging past experience. Significant progress has been made in this field; however, its applicability to controlling highly distributed, large-scale physical systems remains limited for two reasons:

- Reinforcement learning approaches perform poorly in partially observable control spaces

- They are unable to satisfy physical and engineering system constraints while pursuing operational objectives.

This project will tackle decentralized control and coordination tasks in highly distributed infrastructure systems such as power grids. The devised decentralized learning techniques will produce accurate and robust methods that represent a leap toward human out-of-the-loop decision-making. The solutions will maximize the value of contemporary energy systems and allow for optimal and scalable use of resources.

This work supports NREL's integrated energy pathways critical objective by delivering cost-effective and resilient technologies for energy system management.
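The constraint-satisfaction limitation noted above can be illustrated with a small feasibility filter placed between a learned policy and the physical system. This is only a sketch under stated assumptions, not the project's method: the function name `make_feasible`, the per-device box limits, and the single aggregate limit are all hypothetical, and the clip-and-rescale step is a simple heuristic rather than an exact projection.

```python
def make_feasible(action, lower, upper, total_limit):
    """Map a proposed setpoint vector to one that respects device and feeder limits.

    Assumptions (hypothetical, for illustration only):
      - lower[i] <= 0 <= upper[i] for every device, so shrinking a setpoint
        toward zero never violates its box limits
      - total_limit > 0 is an aggregate cap on the sum of all setpoints
    """
    # Step 1: enforce each device's own limits.
    clipped = [min(max(a, lo), hi) for a, lo, hi in zip(action, lower, upper)]

    # Step 2: if the aggregate cap is exceeded, shrink all setpoints uniformly.
    total = sum(clipped)
    if total > total_limit:
        scale = total_limit / total  # in (0, 1) because total > total_limit > 0
        clipped = [c * scale for c in clipped]
    return clipped


# A policy proposes setpoints that break both the device and feeder limits.
proposed = [3.0, 5.0, -1.0]
safe = make_feasible(proposed, lower=[0.0, 0.0, 0.0], upper=[4.0, 4.0, 4.0], total_limit=6.0)
```

A filter like this keeps the applied action feasible, but it does not teach the policy about the constraints, which is one reason constraint-aware learning remains an open research question.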

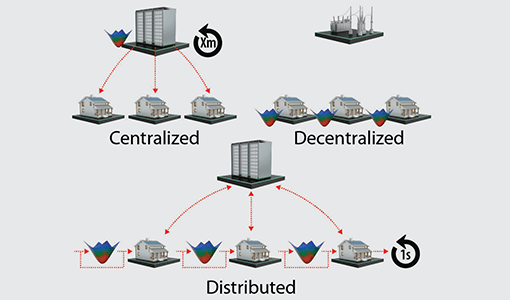

State-of-the-art microgrid operation typically assumes a flat, static partition of the power system into microgrids that are coordinated via either centralized or distributed control algorithms. This approach works well on small- to medium-size systems under normal or static operating conditions. However, it becomes infeasible when the number of controllable distributed energy resources reaches the millions. A static partition is also inadequate from a resilience perspective: a partition that is best for normal operation might be disastrous during major disruptions, and a partition that is best during disruptions might be suboptimal during normal operation. Even during normal operation, the optimal partition may change over time (e.g., because large numbers of electric vehicles dynamically change the loading conditions in the network). This calls for dynamic microgrid formation with a multiresolution control structure, laying the foundation for the vision of a fractal grid.

In this framework, microgrids self-optimize when isolated from the main grid and participate in optimal operation when interconnected to the main grid using distributed control methods. We adaptively define the boundaries of microgrids in real time based on operating conditions. In particular, when a disruption is identified:

- A new partition within each layer of hierarchy is established via distributed communications and control algorithms while adhering to the priority of critical loads and availability of communication systems

- Distributed control algorithms are applied to prevent the system from collapse.

Once collapse is prevented and the disruption is cleared, the system will gradually return to normal operation by reconfiguring the microgrid partition to an optimal configuration and reconnecting the microgrids that disconnected during the disruption.
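The repartitioning idea described above can be sketched on a toy network. The example below is a centralized simplification for illustration only, whereas the project itself relies on distributed communications and control. It assumes a hypothetical five-bus feeder, removes faulted lines, treats each remaining connected component as a microgrid, and serves critical loads first within each island's generation capacity. The function names `islands` and `repartition` and all data values are invented for this sketch.

```python
from collections import deque


def islands(buses, lines):
    """Return the connected components of the network as sorted bus lists."""
    adjacency = {bus: set() for bus in buses}
    for u, v in lines:
        adjacency[u].add(v)
        adjacency[v].add(u)

    seen, components = set(), []
    for start in buses:
        if start in seen:
            continue
        # Breadth-first search from each unvisited bus.
        queue, component = deque([start]), []
        seen.add(start)
        while queue:
            node = queue.popleft()
            component.append(node)
            for neighbor in adjacency[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        components.append(sorted(component))
    return components


def repartition(buses, lines, faulted, generation, load, critical):
    """Form one microgrid per island and pick loads to serve, critical first."""
    # Faulted lines must use the same (u, v) orientation as in `lines`.
    healthy = [line for line in lines if line not in faulted]
    plan = []
    for component in islands(buses, healthy):
        capacity = sum(generation.get(bus, 0.0) for bus in component)
        # Critical loads sort first; ties are broken by smaller demand.
        loaded = [bus for bus in component if load.get(bus, 0.0) > 0]
        order = sorted(loaded, key=lambda bus: (bus not in critical, load[bus]))
        served, remaining = [], capacity
        for bus in order:
            if load[bus] <= remaining:
                served.append(bus)
                remaining -= load[bus]
        plan.append({"buses": component, "capacity": capacity, "served": sorted(served)})
    return plan


# Hypothetical radial feeder 1-2-3-4-5 with a fault on line (2, 3).
plan = repartition(
    buses=[1, 2, 3, 4, 5],
    lines=[(1, 2), (2, 3), (3, 4), (4, 5)],
    faulted={(2, 3)},
    generation={1: 5.0, 5: 3.0},
    load={2: 4.0, 3: 2.0, 4: 2.0},
    critical={4},
)
```

In this toy case the fault splits the feeder into two islands, and the second island has enough generation only for its critical load at bus 4. A real implementation would also need power flow feasibility checks and communication availability, which this sketch ignores.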

By leveraging deep reinforcement learning paradigms and the high-performance computing capability at NREL, this project develops an innovative and scalable multi-objective deep reinforcement learning control (MODRLC) system for building control that can simultaneously address building-centric, grid-serving, and resilience objectives. Unlike existing grid-interactive building controllers, MODRLC does not require expensive, on-demand computation during real-time control, and it avoids building-by-building customization of dynamics modeling. Both features can reduce implementation costs (i.e., hardware and building modeling labor costs) in real-life applications and thus enable wide adoption of this technology. In this project, researchers designed a reinforcement learning training framework on NREL's high-performance computer to facilitate efficient learning of building control policies, evaluated the trained controllers’ performance, and compared them with the state-of-the-art optimization-based building controllers.

Learn more in Grid-Interactive Multi-Zone Building Control Using Reinforcement Learning With Global-Local Policy Search.

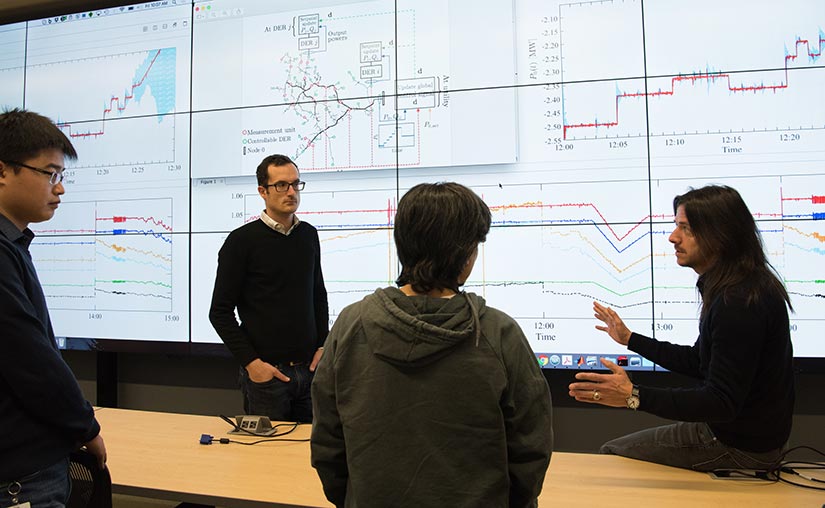

NREL researchers developed an innovative, distributed photovoltaic (PV) inverter control architecture that maximizes PV penetration while optimizing system performance and seamlessly integrating control, algorithms, and communications systems to support distribution grid operations.

The growth of PV capacity has introduced distribution system challenges. For example, reverse power flows increase the likelihood of voltages violating prescribed limits, while fast variations in PV output tend to cause transients that lead to wear-out of switchgear. Recent distributed optimization and control approaches that are inspired by—and adapted from—legacy methodologies and practices are not compatible with distribution systems with high PV penetrations and, therefore, do not address emerging efficiency, reliability, and power-quality concerns.
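The link between reverse power flow and overvoltage can be made concrete with the widely used first-order approximation of voltage change along a feeder segment, ΔV ≈ (R·P + X·Q)/V. The sketch below assumes a simplified single-phase equivalent; the function name `voltage_change` and all numerical values are hypothetical and chosen only to show the direction of the effect.

```python
def voltage_change(p_kw, q_kvar, r_ohm, x_ohm, v_nominal):
    """Approximate voltage change in volts at the far end of a feeder segment.

    Uses the first-order approximation dV ~ (R*P + X*Q) / V, where P and Q are
    the net active and reactive power injected at the far end (positive means
    export toward the substation). This neglects losses and angle differences.
    """
    p_watts = p_kw * 1e3
    q_vars = q_kvar * 1e3
    return (r_ohm * p_watts + x_ohm * q_vars) / v_nominal


# Hypothetical 240 V service with R = 0.5 ohm and X = 0.3 ohm.
# Exporting 5 kW of PV at unity power factor raises the voltage by about 10.4 V.
rise_unity_pf = voltage_change(p_kw=5.0, q_kvar=0.0, r_ohm=0.5, x_ohm=0.3, v_nominal=240.0)

# If the inverter also absorbs 2 kvar, the rise drops to about 7.9 V.
rise_with_var_support = voltage_change(p_kw=5.0, q_kvar=-2.0, r_ohm=0.5, x_ohm=0.3, v_nominal=240.0)
```

The second call hints at why inverter-level control matters: adjusting reactive power at the PV inverter is one lever for keeping voltages within prescribed limits as PV penetration grows.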

This project developed distributed controllers that were validated via comprehensive and tiered software-only simulation and hardware-in-the-loop simulation. Microcontroller boards were used to create and embed the optimization-centric controllers into next-generation gateways and inverters.

The objective of this project is to advance the foundational science for control and optimization of multi-energy systems by:

- Uncovering and modeling intrinsic couplings among electricity, natural gas, water, and heating systems, with emphasis on distribution-level operational setups

- Formulating new classes of multi-energy-system optimization problems incorporating the inter-system dependencies and formalizing well-defined optimization and control objectives

- Addressing the solution of the formulated problems by tapping into contemporary advances in convex relaxations and distributed optimization and control.

Departing from existing approaches based on off-the-shelf black-box tools, the project produced innovative, computationally affordable distributed optimization and control schemes that are grounded in solid analytical foundations and will pave the way to the next-generation real-time control architecture for integrated energy systems.

Publications

Decentralized Low-Rank State Estimation for Power Distribution Systems, IEEE Transactions on Smart Grid (2021)

Economic Dispatch With Distributed Energy Resources: Co-Optimization of Transmission and Distribution Systems, IEEE Control Systems Letters (2020)

Distributed Minimization of the Power Generation Cost in Prosumer-Based Distribution Networks, American Control Conference (2020)

Model-Free Primal-Dual Methods for Network Optimization With Application to Real-Time Optimal Power Flow, American Control Conference (2020)

Joint Chance Constraints in AC Optimal Power Flow: Improving Bounds Through Learning, IEEE Transactions on Smart Grid (2019)

Online Optimization as a Feedback Controller: Stability and Tracking, IEEE Transactions on Control of Network Systems (2019)

Online Primal-Dual Methods With Measurement Feedback for Time-Varying Convex Optimization, IEEE Transactions on Signal Processing (2019)

Real-Time Feedback-Based Optimization of Distribution Grids: A Unified Approach, IEEE Transactions on Control of Network Systems (2019)

View all NREL publications about distributed controls and optimization.

Contact

Andrey Bernstein

Group Manager/Senior Researcher, Energy Systems Control and Optimization

andrey.bernstein@nrel.gov

303-275-3912
